This blog is open to any who wish to comment on Australian society, the state of the environment or political shenanigans at Federal, State and Local Government level.

On 7 April 2022 the Ministry of Foreign Affairs of the Russian Federation announced that all the then current members of the Parliament of Australia were banned from entering Russian territory.

Presumably this was Russian President Vladimir Putin's response to the sanctions Australia has imposed on Russia since 2014 in relation to Russia's aggression towards Ukraine.

This entry ban apparently remains in place for those named sitting MPs and Senators remaining in the Australian Parliament in 2024.

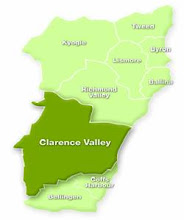

Which means that the NSW Northern Rivers region has two banned MPs - the Members for Richmond and Page.

7 April 2022 18:54

Foreign Ministry statement on personal sanctions on senior officials and MPs of Australia

755-07-04-2022

Obediently following the policy set by the collective West, Canberra has fallen into a Russophobic frenzy and introduced sanctions against Russia’s senior leadership and practically all members of parliament. In response, on April 7, 2022, Russia added to its stop list members of the Australian National Security Committee, House of Representatives, Senate and regional legislative assemblies. They are denied entry into the Russian Federation.

This step comes in response to the unfriendly actions by the current Australian Government, which is prepared to support any actions aimed at containing Russia.

Subsequent announcements will expand the sanctions blacklist to include Australian military, entrepreneurs, experts and media figures who contribute to negative perceptions of our country. We will resolutely oppose every anti-Russia action – from the introduction of new personal sanctions to restrictions on bilateral economic ties, which is doing damage to bilateral economic relations.

Below is the list of Australian citizens who are denied entry into the Russian Federation.

1 Scott Morrison, Prime Minister

2 Barnaby Joyce, Deputy Prime Minister

3 Karen Andrews, Minister for Home Affairs

4 Simon Birmingham, Minister for Finance

5 Patrick Gorman, MP, House of Representatives

6 Luke Gosling, MP, House of Representatives

7 Peter Dutton, Minister for Defence

8 Michaelia Cash, Attorney-General

9 Marise Payne, Minister for Foreign Affairs

10 Joshua Frydenberg, Treasurer

11 Anthony Albanese, MP, House of Representatives

12 John Alexander, MP, House of Representatives

13 Katrina Allen, MP, House of Representatives

14 Anne Aly, MP, House of Representatives

15 Kevin Andrews, MP, House of Representatives

16 Bridget Archer, MP, House of Representatives

17 Adam Bandt, MP, House of Representatives

18 Angie Bell, MP, House of Representatives

19 Sharon Bird, MP, House of Representatives

20 Christopher Bowen, MP, House of Representatives

21 Russell Broadbent, MP, House of Representatives

22 Scott Buchholz, MP, House of Representatives

23 Anthony Burke, MP, House of Representatives

24 Linda Burney, MP, House of Representatives

25 Josh Burns, MP, House of Representatives

26 Mark Butler, MP, House of Representatives

27 Terri Butler, MP, House of Representatives

28 Anthony Byrne, MP, House of Representatives

29 James Chalmers, MP, House of Representatives

30 Darren Chester, MP, House of Representatives

31 Lisa Chesters, MP, House of Representatives

32 George Christensen, MP, House of Representatives

33 Jason Clare, MP, House of Representatives

34 Sharon Claydon, MP, House of Representatives

35 Elizabeth Coker, MP, House of Representatives

36 David Coleman, MP, House of Representatives

37 Julie Collins, MP, House of Representatives

38 Patrick Conaghan, MP, House of Representatives

39 Vincent Connelly, MP, House of Representatives

40 Patrick Conroy, MP, House of Representatives

41 Mark Coulton, MP, House of Representatives

42 Dugald Dick, MP, House of Representatives

43 Mark Dreyfus, MP, House of Representatives

44 Damian Drum, MP, House of Representatives

45 Maria [Justine] Elliot, MP, House of Representatives

46 Warren Entsch, MP, House of Representatives

47 Trevor Evans, MP, House of Representatives

48 Jason Falinski, MP, House of Representatives

49 Joel Fitzgibbon, MP, House of Representatives

50 Paul Fletcher, MP, House of Representatives

51 Nicolle Flint, MP, House of Representatives

52 Michael Freelander, MP, House of Representatives

53 Andrew Gee, MP, House of Representatives

54 Steven Georganas, MP, House of Representatives

55 Andrew Giles, MP, House of Representatives

56 David Gillespie, MP, House of Representatives

57 Ian Goodenough, MP, House of Representatives

58 Helen Haines, MP, House of Representatives

59 Garth Hamilton, MP, House of Representatives

60 Celia Hammond, MP, House of Representatives

61 Andrew Hastie, MP, House of Representatives

62 Alexander Hawke, MP, House of Representatives

63 Christopher Hayes, MP, House of Representatives

64 Julian Hill, MP, House of Representatives

65 Kevin Hogan, MP, House of Representatives

66 Luke Howarth, MP, House of Representatives

67 Gregory Hunt, MP, House of Representatives

68 Edham Husic, MP, House of Representatives

69 Stephen Irons, MP, House of Representatives

70 Stephen Jones, MP, House of Representatives

71 Robert Katter, MP, House of Representatives

72 Gerardine Kearney, MP, House of Representatives

73 Craig Kelly, MP, House of Representatives

74 Matt Keogh, MP, House of Representatives

75 Peter Khalil, MP, House of Representatives

76 Catherine King, MP, House of Representatives

77 Madeleine King, MP, House of Representatives

78 Andrew Laming, MP, House of Representatives

79 Michelle Landry, MP, House of Representatives

80 Julian Leeser, MP, House of Representatives

81 Andrew Leigh, MP, House of Representatives

82 Sussan Ley, MP, House of Representatives

83 David Littleproud, MP, House of Representatives

84 Gladys Liu, MP, House of Representatives

85 Nola Marino, MP, House of Representatives

86 Richard Marles, MP, House of Representatives

87 Fiona Martin, MP, House of Representatives

88 Kristy McBain, MP, House of Representatives

89 Emma McBride, MP, House of Representatives

90 Michael McCormack, MP, House of Representatives

91 Melissa McIntosh, MP, House of Representatives

92 Brian Mitchell, MP, House of Representatives

93 Robert Mitchell, MP, House of Representatives

94 Ben Morton, MP, House of Representatives

95 Daniel Mulino, MP, House of Representatives

96 Peta Murphy, MP, House of Representatives

97 Shayne Neumann, MP, House of Representatives

98 Edward O'Brien, MP, House of Representatives

99 Llewellyn O'Brien, MP, House of Representatives

100 Brendan O'Connor, MP, House of Representatives

101 Kenneth O'Dowd, MP, House of Representatives

102 Clare O'Neil, MP, House of Representatives

103 Julie Owens, MP, House of Representatives

104 Antony Pasin, MP, House of Representatives

105 Alicia Payne, MP, House of Representatives

106 Gavin Pearce, MP, House of Representatives

107 Graham Perrett, MP, House of Representatives

108 Fiona Phillips, MP, House of Representatives

109 Keith Pitt, MP, House of Representatives

110 Tanya Plibersek, MP, House of Representatives

111 Charles Porter, MP, House of Representatives

112 Melissa Price, MP, House of Representatives

113 Rowan Ramsey, MP, House of Representatives

114 Armanda Rishworth, MP, House of Representatives

115 Stuart Robert, MP, House of Representatives

116 Michelle Rowland, MP, House of Representatives

117 Joanne Ryan, MP, House of Representatives

118 Rebekha Sharkie, MP, House of Representatives

119 Devanand Sharma, MP, House of Representatives

120 William Shorten, MP, House of Representatives

121 Julian Simmonds, MP, House of Representatives

122 Anthony Smith, MP, House of Representatives

123 David Smith, MP, House of Representatives

124 Warren Snowdon, MP, House of Representatives

125 Anne Stanley, MP, House of Representatives

126 Zali Steggall, MP, House of Representatives

127 James Stevens, MP, House of Representatives

128 Michael Sukkar, MP, House of Representatives

129 Meryl Swanson, MP, House of Representatives

130 Angus Taylor, MP, House of Representatives

131 Daniel Tehan, MP, House of Representatives

132 Susan Templeman, MP, House of Representatives

133 Matthew Thistlethwaite, MP, House of Representatives

134 Phillip Thompson, MP, House of Representatives

135 Kate Thwaites, MP, House of Representatives

136 Alan Tudge, MP, House of Representatives

137 Maria Vamvakinou, MP, House of Representatives

138 Albertus van Manen, MP, House of Representatives

139 Ross Vasta, MP, House of Representatives

140 Andrew Wallace, MP, House of Representatives

141 Timothy Watts, MP, House of Representatives

142 Anne Webster, MP, House of Representatives

143 Anika Wells, MP, House of Representatives

144 Lucy Wicks, MP, House of Representatives

145 Andrew Wilkie, MP, House of Representatives

146 Joshua Wilson, MP, House of Representatives

147 Richard Wilson, MP, House of Representatives

148 Timothy Wilson, MP, House of Representatives

149 Jason Wood, MP, House of Representatives

150 Kenneth Wyatt, MP, House of Representatives

151 Terry Young, MP, House of Representatives

152 Antonio Zappia, MP, House of Representatives

153 Trent Zimmerman, MP, House of Representatives

154 Eric Abetz, Senator

155 Alex Antic, Senator

156 Wendy Askew, Senator

157 Tim Ayres, Senator

158 Catryna Bilyk, Senator

159 Andrew Bragg, Senator

160 Slade Brockman, Senator

161 Carol Brown, Senator

162 Matthew Canavan, Senator

163 Kim Carr, Senator

164 Claire Chandler, Senator

165 Anthony Chisholm, Senator

166 Raff Ciccone, Senator

167 Richard Colbeck, Senator

168 Dorinda Cox, Senator

169 Perin Davey, Senator

170 Patrick Dodson, Senator

171 Jonathon Duniam, Senator

172 Don Farrell, Senator

173 Mehreen Faruqi, Senator

174 David Fawcett, Senator

175 Concetta Fierravanti-Wells, Senator

176 Katy Gallagher, Senator

177 Nita Green, Senator

178 Stirling Griff, Senator

179 Karen Grogan, Senator

180 Pauline Hanson, Senator

181 Sarah Hanson-Young, Senator

182 Sarah Henderson, Senator

183 Hollie Hughes, Senator

184 Jane Hume, Senator

185 Kristina Keneally, Senator

186 Kimberley Kitching, Senator

187 Jacqui Lambie, Senator

188 Sue Lines, Senator

189 Jenny McAllister, Senator

190 Malarndirri McCarthy, Senator

191 Susan McDonald, Senator

192 James McGrath, Senator

193 Bridget McKenzie, Senator

194 Nick McKim, Senator

195 Andrew McLachlan, Senator

196 Sam McMahon, Senator

197 Greg Mirabella, Senator

198 Jim Molan, Senator

199 Deborah O'Neill, Senator

200 Matt O'Sullivan, Senator

201 James Paterson, Senator

202 Rex Patrick, Senator

203 Hellen Polley, Senator

204 Louise Pratt, Senator

205 Gerard Rennick, Senator

206 Linda Reynolds, Senator

207 Janet Rice, Senator

208 Malcolm Roberts, Senator

209 Anne Ruston, Senator

210 Paul Scarr, Senator

211 Zed Seselja, Senator

212 Tony Sheldon, Senator

213 Ben Small, Senator

214 Dean Smith, Senator

215 Marielle Smith, Senator

216 Jordon Steele-John, Senator

217 Glenn Sterle, Senator

218 Amanda Stoker, Senator

219 Lidia Thorpe, Senator

220 Anne Urquhart, Senator

221 David Van, Senator

222 Jess Walsh, Senator

223 Larissa Waters, Senator

224 Murray Watt, Senator

225 Peter Whish-Wilson, Senator

226 Penny Wong, Senator

227 Matthew Guy, legislative assembly member

228 Steve Dimopoulos, legislative assembly member

This was followed by the promised additional banning lists, as it appears that along with the US, UK, Canada, New Zealand, Japan and the EU, Australia continues to irritate Vladimir Putin.

21 July 2022 19:17

Foreign Ministry statement on introducing personal sanctions on representatives of Australia’s law enforcement agencies, border force and defence sector contractors

1514-21-07-2022

In response to the official Canberra’s adoption of sanctions in line with the Australian version of the Magnitsky Act, the Russian Federation has added 39 people from law enforcement agencies, the border force and Australia’s defence sector contractors to the national stop list.

The names of the blacklisted people are as follows:.....

All 39 names can be found on the Russian Foreign Ministry website at

https://mid.ru/en/press_service/spokesman/official_statement/1823204/

21 June 2023 18:24

Foreign Ministry statement on the introduction of personal sanctions against Australian citizens

1217-21-06-2023

In response to the politically motivated sanctions against Russian individuals and legal entities introduced by the Australian government as part of the Russophobic campaign by the collective West, entry to Russia is closed indefinitely for additional 48 Australians from among contractors of the military-industrial complex, journalists and municipal deputies who are creating the anti-Russian agenda in that country. Their names are as follows:.....

All 48 names can be found on the Russian Foreign Ministry website at

https://mid.ru/en/press_service/spokesman/official_statement/1890258/

17 April 2024 11:23

Foreign Ministry statement on personal sanctions on members of Australia’s municipal councils

703-17-04-2024

In response to the politically motivated sanctions imposed on Russian private individuals and legal entities by the Government of Australia as part of the collective West’s Russophobic campaign, the decision has been made to indefinitely deny entry to Russia to 235 Australian nationals who are members of municipal councils actively promoting the anti-Russia agenda in their country. The complete list of individuals affected by this measure follows below.

Given that official Canberra shows no sign of renouncing its anti-Russia position and the continued introduction of new sanctions, we will further update the Russian stop list accordingly.....

All 235 names can be found on the Russian Foreign Ministry website at

https://mid.ru/en/press_service/spokesman/official_statement/1944697/

The Me Too movement began in the United States around 2006. In 2017 the #MeToo hashtag went viral after actress Alyssa Milano tweeted ‘me too’, and in Australia journalist Tracey Spicer invited women to tell their stories after the Weinstein scandal broke.

This my friends sums it all up, women’s lives today, tomorrow and everyday 👇🏻#MakeItStop#March4Justice @march4justiceau

— Janine Hendry @_Janine_Hendry on Threads | Insta (@janine_hendry) April 15, 2024

pic.twitter.com/hUP5HKASkl

Now the sexual assaults/rapes......

2022

Sexual Assault Reported To Police

In 2019 there were 26,892 victims of sexual assault in Australia, an increase of 2% from the previous year. This was the eighth consecutive annual increase and the highest number for this offence recorded in a single year. After accounting for population growth, the victimisation rate has also increased annually over this eight-year period from 83 to 106 victims per 100,000 persons.

For victims of sexual assault in 2019:

Well, this month attention has turned away from AI being used to create multiple fake bird species and celebrity images, and from Microsoft's use of excruciatingly garish alternative landscapes to promote its software. The focus has shifted back to AI being used by bad actors in global and domestic political arenas during election years.

Nature, WORLD VIEW, 9 April 2024:

Political candidates are increasingly using AI-generated ‘softfakes’ to boost their campaigns. This raises deep ethical concerns.

By Rumman Chowdhury

Of the nearly two billion people living in countries that are holding elections this year, some have already cast their ballots. Elections held in Indonesia and Pakistan in February, among other countries, offer an early glimpse of what’s in store as artificial intelligence (AI) technologies steadily intrude into the electoral arena. The emerging picture is deeply worrying, and the concerns are much broader than just misinformation or the proliferation of fake news.

As the former director of the Machine Learning, Ethics, Transparency and Accountability (META) team at Twitter (before it became X), I can attest to the massive ongoing efforts to identify and halt election-related disinformation enabled by generative AI (GAI). But uses of AI by politicians and political parties for purposes that are not overtly malicious also raise deep ethical concerns.

GAI is ushering in an era of ‘softfakes’. These are images, videos or audio clips that are doctored to make a political candidate seem more appealing. Whereas deepfakes (digitally altered visual media) and cheap fakes (low-quality altered media) are associated with malicious actors, softfakes are often made by the candidate’s campaign team itself.

In Indonesia’s presidential election, for example, winning candidate Prabowo Subianto relied heavily on GAI, creating and promoting cartoonish avatars to rebrand himself as gemoy, which means ‘cute and cuddly’. This AI-powered makeover was part of a broader attempt to appeal to younger voters and displace allegations linking him to human-rights abuses during his stint as a high-ranking army officer. The BBC dubbed him “Indonesia’s ‘cuddly grandpa’ with a bloody past”. Furthermore, clever use of deepfakes, including an AI ‘get out the vote’ virtual resurrection of Indonesia’s deceased former president Suharto by a group backing Subianto, is thought by some to have contributed to his surprising win.

Nighat Dad, the founder of the research and advocacy organization Digital Rights Foundation, based in Lahore, Pakistan, documented how candidates in Bangladesh and Pakistan used GAI in their campaigns, including AI-written articles penned under the candidate’s name. South and southeast Asian elections have been flooded with deepfake videos of candidates speaking in numerous languages, singing nostalgic songs and more — humanizing them in a way that the candidates themselves couldn’t do in reality.

What should be done? Global guidelines might be considered around the appropriate use of GAI in elections, but what should they be? There have already been some attempts. The US Federal Communications Commission, for instance, banned the use of AI-generated voices in phone calls, known as robocalls. Businesses such as Meta have launched watermarks — a label or embedded code added to an image or video — to flag manipulated media.

But these are blunt and often voluntary measures. Rules need to be put in place all along the communications pipeline — from the companies that generate AI content to the social-media platforms that distribute them.

Content-generation companies should take a closer look at defining how watermarks should be used. Watermarking can be as obvious as a stamp, or as complex as embedded metadata to be picked up by content distributors.

Companies that distribute content should put in place systems and resources to monitor not just misinformation, but also election-destabilizing softfakes that are released through official, candidate-endorsed channels. When candidates don’t adhere to watermarking — none of these practices are yet mandatory — social-media companies can flag and provide appropriate alerts to viewers. Media outlets can and should have clear policies on softfakes. They might, for example, allow a deepfake in which a victory speech is translated to multiple languages, but disallow deepfakes of deceased politicians supporting candidates.

Election regulatory and government bodies should closely examine the rise of companies that are engaging in the development of fake media. Text-to-speech and voice-emulation software from Eleven Labs, an AI company based in New York City, was deployed to generate robocalls that tried to dissuade voters from voting for US President Joe Biden in the New Hampshire primary elections in January, and to create the softfakes of former Pakistani prime minister Imran Khan during his 2024 campaign outreach from a prison cell. Rather than pass softfake regulation on companies, which could stifle allowable uses such as parody, I instead suggest establishing election standards on GAI use. There is a long history of laws that limit when, how and where candidates can campaign, and what they are allowed to say.

Citizens have a part to play as well. We all know that you cannot trust what you read on the Internet. Now, we must develop the reflexes to not only spot altered media, but also to avoid the emotional urge to think that candidates’ softfakes are ‘funny’ or ‘cute’. The intent of these isn’t to lie to you — they are often obviously AI generated. The goal is to make the candidate likeable.

Softfakes are already swaying elections in some of the largest democracies in the world. We would be wise to learn and adapt as the ongoing year of democracy, with some 70 elections, unfolds over the next few months.

COMPETING INTERESTS

The author declares no competing interests.

[my yellow highlighting]

Charles Darwin University, Expert Alert, media release, 12 April 2024, excerpt:

Governments must crack down on AI interfering with elections

Charles Darwin University Computational and Artificial Intelligence expert Associate Professor Niusha Shafiabady.

“Like it or not, we are affected by what we come across in social media platforms. The future wars are not planned by missiles or tanks, but they can easily run on social media platforms by influencing what people think and do. This applies to election results.

“Microsoft has said that the election outcomes in India, Taiwan and the US could be affected by the AI plays by powers like China or North Korea. In the world of technology, we call this disinformation, meaning producing misleading information on purpose to change people’s views. What can we do to fight these types of attacks? Well, I believe we should question what we see or read. Not everything we hear is based on the truth. Everyone should be aware of this.

“Governments should enforce more strict regulations to fight misinformation, things like: Finding triggers that show signs of unwanted interference; blocking and stopping the unauthorised or malicious trends; enforcing regulations on social media platforms to produce reports to the government to demonstrate and measure the impact and the flow of the information on the matters that affect the important issues such as elections and healthcare; and enforcing regulations on the social media platforms to monitor and stop the fake information sources or malicious actors.”

The Conversation, 10 April 2024:

Election disinformation: how AI-powered bots work and how you can protect yourself from their influence

Nick Hajli, AI Strategist and Professor of Digital Strategy, Loughborough University

Social media platforms have become more than mere tools for communication. They’ve evolved into bustling arenas where truth and falsehood collide. Among these platforms, X stands out as a prominent battleground. It’s a place where disinformation campaigns thrive, perpetuated by armies of AI-powered bots programmed to sway public opinion and manipulate narratives.

AI-powered bots are automated accounts that are designed to mimic human behaviour. Bots on social media, chat platforms and conversational AI are integral to modern life. They are needed to make AI applications run effectively......

How bots work

Social influence is now a commodity that can be acquired by purchasing bots. Companies sell fake followers to artificially boost the popularity of accounts. These followers are available at remarkably low prices, with many celebrities among the purchasers.

In the course of our research, for example, colleagues and I detected a bot that had posted 100 tweets offering followers for sale.

Using AI methodologies and a theoretical approach called actor-network theory, my colleagues and I dissected how malicious social bots manipulate social media, influencing what people think and how they act with alarming efficacy. We can tell if fake news was generated by a human or a bot with an accuracy rate of 79.7%. It is crucial to comprehend how both humans and AI disseminate disinformation in order to grasp the ways in which humans leverage AI for spreading misinformation.

To take one example, we examined the activity of an account named “True Trumpers” on Twitter.

The account was established in August 2017, has no followers and no profile picture, but had, at the time of the research, posted 4,423 tweets. These included a series of entirely fabricated stories. It’s worth noting that this bot originated from an eastern European country.

Research such as this influenced X to restrict the activities of social bots. In response to the threat of social media manipulation, X has implemented temporary reading limits to curb data scraping and manipulation. Verified accounts have been limited to reading 6,000 posts a day, while unverified accounts can read 600 a day. This is a new update, so we don’t yet know if it has been effective.

Can we protect ourselves?

However, the onus ultimately falls on users to exercise caution and discern truth from falsehood, particularly during election periods. By critically evaluating information and checking sources, users can play a part in protecting the integrity of democratic processes from the onslaught of bots and disinformation campaigns on X. Every user is, in fact, a frontline defender of truth and democracy. Vigilance, critical thinking, and a healthy dose of scepticism are essential armour.

With social media, it’s important for users to understand the strategies employed by malicious accounts.

Malicious actors often use networks of bots to amplify false narratives, manipulate trends and swiftly disseminate misinformation. Users should exercise caution when encountering accounts exhibiting suspicious behaviour, such as excessive posting or repetitive messaging.

Disinformation is also frequently propagated through dedicated fake news websites. These are designed to imitate credible news sources. Users are advised to verify the authenticity of news sources by cross-referencing information with reputable sources and consulting fact-checking organisations.

Self awareness is another form of protection, especially from social engineering tactics. Psychological manipulation is often deployed to deceive users into believing falsehoods or engaging in certain actions. Users should maintain vigilance and critically assess the content they encounter, particularly during periods of heightened sensitivity such as elections.

By staying informed, engaging in civil discourse and advocating for transparency and accountability, we can collectively shape a digital ecosystem that fosters trust, transparency and informed decision-making.

Alfred Lubrano

As the presidential campaign slowly progresses, artificial intelligence continues to accelerate at a breathless pace — capable of creating an infinite number of fraudulent images that are hard to detect and easy to believe.

Experts warn that by November voters in Pennsylvania and other states will have witnessed counterfeit photos and videos of candidates enacting one scenario after another, with reality wrecked and the truth nearly unknowable.

“This is the first presidential campaign of the AI era,” said Matthew Stamm, a Drexel University electrical and computer engineering professor who leads a team that detects false or manipulated political images. “I believe things are only going to get worse.”

Last year, Stamm’s group debunked a political ad for then-presidential candidate Florida Republican Gov. Ron DeSantis that appeared on Twitter. It showed former President Donald Trump embracing and kissing Anthony Fauci, long a target of the right for his response to COVID-19.

That spot was a “watershed moment” in U.S. politics, said Stamm, director of his school’s Multimedia and Information Security Lab. “Using AI-created media in a misleading manner had never been seen before in an ad for a major presidential candidate,” he said.

“This showed us how there’s so much potential for AI to create voting misinformation. It could get crazy.”

Election experts speak with dread of AI’s potential to wreak havoc on the election: false “evidence” of candidate misconduct; sham videos of election workers destroying ballots or preventing people from voting; phony emails that direct voters to go to the wrong polling locations; ginned-up texts sending bogus instructions to election officials that create mass confusion.....

Malicious intent

AI allows people with malicious intent to work with great speed and sophistication at low cost, according to the Cybersecurity & Infrastructure Security Agency, part of the U.S. Department of Homeland Security.

That swiftness was on display in June 2018. Doermann’s University of Buffalo colleague, Siwei Lyu, presented a paper that demonstrated how AI-generated deepfake videos could be detected because no one was blinking their eyes; the faces had been transferred from still photos.

Within three weeks, AI-equipped fraudsters stopped creating deepfakes based on photos and began culling from videos in which people blinked naturally, Doermann said, adding, “Every time we publish a solution for detecting AI, somebody gets around it quickly.”

Six years later, with AI that much more developed, “it’s gained remarkable capacities that improve daily,” said political communications expert Kathleen Hall Jamieson, director of the University of Pennsylvania’s Annenberg Public Policy Center. “Anything we can say now about AI will change in two weeks. Increasingly, that means deepfakes won’t be easily detected.

“We should be suspicious of everything we see.”

AI-generated misinformation helps exacerbate already-entrenched political polarization throughout America, said Cristina Bicchieri, Penn professor of philosophy and psychology.

“When we see something in social media that aligns with our point of view, even if it’s fake, we tend to want to believe it,” she said.

To battle fabrications, Stamm of Drexel said, the smart consumer could delay reposting emotionally charged material from social media until checking its veracity.

But that’s a lot to ask.

Human overreaction to a false report, he acknowledged, “is harder to resolve than any anti-AI stuff I develop in my lab.

“And that’s another reason why we’re in uncharted waters.”

Hi! My name is Boy. I'm a male bi-coloured tabby cat. Ever since I discovered that Malcolm Turnbull's dogs were allowed to blog, I have been pestering Clarencegirl to allow me a small space on North Coast Voices.

A false flag musing: I have noticed one particular voice on Facebook which is Pollyanna-positive on the subject of the Port of Yamba becoming a designated cruise ship destination. What this gentleman doesn’t disclose is that, as a principal of Middle Star Pty Ltd, he could be thought to have a potential pecuniary interest due to the fact that this corporation (which has had an office in Grafton since 2012) provides consultancy services and tourism business development services.

A religion & local government musing: On 11 October 2017 Clarence Valley Council has the Church of Jesus Christ Development Fund Inc in Sutherland Local Court No. 6 for a small claims hearing. It would appear that there may be a little issue in rendering unto Caesar. On 19 September 2017 an ordained minister of a religion (which was named by the Royal Commission into Institutional Responses to Child Sexual Abuse in relation to 40 instances of historical child sexual abuse on the NSW North Coast) read the Opening Prayer at Council’s ordinary monthly meeting. Earlier in the year an ordained minister (from a church network alleged to have supported an overseas orphanage closed because of child abuse claims in 2013) read the Opening Prayer and an ordained minister (belonging to yet another church network accused of ignoring child sexual abuse in the US and racism in South Africa) read the Opening Prayer at yet another ordinary monthly meeting. Nice one councillors - you are covering yourselves with glory!

An investigative musing: Newcastle Herald, 12 August 2017: The state’s corruption watchdog has been asked to investigate the finances of the Awabakal Aboriginal Local Land Council, less than 12 months after the troubled organisation was placed into administration by the state government. The Newcastle Herald understands accounting firm PKF Lawler made the decision to refer the land council to the Independent Commission Against Corruption after discovering a number of irregularities during an audit of its financial statements. The results of the audit were recently presented to a meeting of Awabakal members. Administrator Terry Lawler did not respond when contacted by the Herald and a PKF Lawler spokesperson said it was unable to comment on the matter. Given the intricate web of company relationships that existed with at least one former board member it is not outside the realms of possibility that, if ICAC accepts this referral, then United Land Councils Limited (registered New Zealand) and United First Peoples Syndications Pty Ltd (registered Australia) might be interviewed. North Coast Voices readers will remember that on 15 August 2015 representatives of these two companies gave evidence before NSW Legislative Council General Purpose Standing Committee No. 6 INQUIRY INTO CROWN LAND. This evidence included advocating for a Yamba mega port.

A Nationals musing: Word around the traps is that NSW Nats MP for Clarence Chris Gulaptis has been talking up the notion of cruise ships visiting the Clarence River estuary. Fair dinkum! That man can be guaranteed to run with any bad idea put to him. I'm sure one or more cruise ships moored in the main navigation channel on a regular basis for one, two or three days is something other regular river users will really welcome. *pause for appreciation of irony* The draft of the smallest of the smaller cruise vessels is 3 metres and it would only stay safely afloat in that channel. Even the Yamba-Iluka ferry has been known to get momentarily stuck in silt/sand from time to time in Yamba Bay and even a very small cruise ship wouldn't be able to safely enter and exit Iluka Bay. You can bet your bottom dollar operators of cruise lines would soon be calling for dredging at the approach to the river mouth - and you know how well that goes down with the local residents.

A local councils musing: Which Northern Rivers council is on a low-key NSW Office of Local Government watch list courtesy of feet dragging by a past general manager?

A serial pest musing: I'm sure the Clarence Valley was thrilled to find that a well-known fantasist is active once again in the wee small hours of the morning treading a well-worn path of accusations involving police, local business owners and others.

An investigative musing: Which NSW North Coast council is batting to have the longest running code of conduct complaint investigation on record?

A fun fact musing: An estimated 24,000 whales migrated along the NSW coastline in 2016, according to the NSW National Parks and Wildlife Service, and the migration period is getting longer.

A which bank? musing: Despite a net profit last year of $9,227 million the Commonwealth Bank still insists on paying interest below Centrelink deeming rates on money held in Pensioner Security Accounts. One local wag says he’s waiting for the first bill from the bank charging him for the privilege of keeping his pension dollars at that bank.

A Daily Examiner musing: Just when you thought this newspaper could sink no lower under News Corp management, it continues to give column space to Andrew Bolt.

A thought to ponder musing: In case of bushfire or flood - do you have an emergency evacuation plan for the family pet?

An adoption musing: Every week on the NSW North Coast a number of cats and dogs find themselves without a home. If you want to do your bit and give one bundle of joy a new family, contact Happy Paws on 0419 404 766 or your local council pound.